The Motivation: Reducing Storage Overhead

EigenDA validators store encoded blob chunks proportionally to their stake. When a blob is dispersed to multiple quorums, validators participating in multiple quorums need to store chunks for each one.

The Problem: If we assign chunks independently per quorum, a validator with:

-

5% stake in Quorum 0 → stores 5% of chunks

-

15% stake in Quorum 1 → stores 15% of chunks

-

Total storage: 20% of all chunks

The Goal: Can we do better? What if we could reuse the same chunks across quorums, so this validator stores only 15% instead of 20%?

This is exactly what the new assignment algorithm achieves: minimize the number of chunks sent to each validator while preserving the reconstruction guarantee.

The Old Approach: Independent Per-Quorum Assignment

The original assignment algorithm (core/assignment.go) handles multiple quorums by calling GetOperatorAssignment independently for each quorum. Each quorum gets its own separate assignment proportional to the validator’s stake in that quorum. While simple and correct, this approach has no overlap optimization—a validator in multiple quorums stores the sum of all per-quorum allocations.

Note that there may be overlapping between the set of chunks assigned by different quorums, but the old approach does not check for overlap and naively sends both sets of chunks to the validator. The validator would store both, leading to wasted bandwidth and storage.

The New Algorithm: Overlap Optimization

Algorithm Description

The new algorithm uses two key strategies to minimize storage:

-

Chunk Overlap Maximization: When a validator participates in multiple quorums for the same blob, the algorithm reuses the same chunk indices across quorums whenever possible.

-

Reconstruction Capping: Each validator is assigned at most the number of chunks needed to independently reconstruct the blob.

The new algorithm (core/v2/assignment.go) implements overlap optimization and reconstruction capping through the following functions:

1. GetAssignmentsForQuorum (Lines 40-90): Assigns chunks for one quorum as the baseline. The number of chunks assigned to each validator is proportional to their stake in this quorum.

2. AddAssignmentsForQuorum (Lines 99-161): Generates a new quorum assignment that maximizes overlap with the baseline assignment through two phases:

-

Phase 1 - Reuse (Lines 115-136): For each validator, reuse as many indices as possible from the baseline assignment, up to what’s needed for the new quorum.

-

Phase 2 - Fill Gaps (Lines 145-158): Distribute unused indices to validators needing more chunks if they have more percentage of stake in the new quorum than the baseline quorum.

3. MergeAssignmentsAndCap (Lines 167-220) Merges all per-quorum assignments and caps at the reconstruction threshold: max_chunks = NumChunks * CodingRate. Once a validator has enough chunks to reconstruct the blob, additional chunks provide no value. Therefore, capping at the reconstruction threshold can improve the performance without reducing security.

4. GetAssignmentsForBlob (Lines 227-266) Orchestrates the full multi-quorum assignment:

(1) generate assignment for Quorum 0 as the baseline assignment,

(2) for each additional quorum, use AddAssignmentsForQuorum with Quorum 0 as baseline,

(3) merge and cap all assignments.

Optimality Guarantee

The algorithm produces optimal storage assignments for two quorums. For three or more quorums, the assignment is not guaranteed to be globally optimal.

However, since Quorums 0 and 1 are typically the largest quorums (containing most validators), and additional quorums are usually smaller custom quorums, the algorithm achieves near-optimal storage reduction for the majority of validators in practice.

The Storage Savings

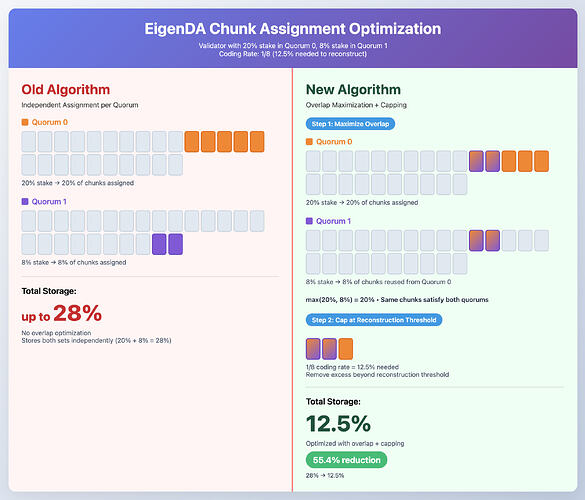

We give a concrete example to show how the new chunk assignment algorithm reduces the chunks assigned to a validator compared to the old one.

Suppose the coding rate is 1/8, i.e., 1/8 of the total chunks can reconstruct the original blob. Suppose a validator has 8% stake in Quorum 0 and 20% stake in Quorum 1.

The old algorithm assigns 28% of the total chunks to this validator. In the new algorithm, the assignment first assigns 20% of chunks (the maximum of the two quorums) by maximizing the overlap. Then, the number of chunks assigned to the validator is further capped at 1/8 (12.5%) since that’s all that’s needed to reconstruct the blob.

Security Guarantee Remains Intact

The new algorithm upholds the essential security property:

Any coalition of validators whose combined stake meets or exceeds the confirmation threshold can fully reconstruct the blob.

In the chunk-overlap maximization part, the optimization does not change the number of chunks assigned to each validator within any quorum. Each validator within one quorum continues to hold a distinct, non-overlapping subset of chunks, exactly as before. Consequently, the reconstruction guarantees for every quorum remain unchanged.

In the chunk-number capping algorithm, excess chunks are removed only from validators who already possess enough data to reconstruct the blob independently. This adjustment ensures no loss of reconstructability while improving efficiency.

Thus, the overall security guarantee of the system is fully preserved.

Conclusion

The new chunk assignment algorithm represents a significant optimization for multi-quorum scenarios. For validators participating in multiple quorums, overlap optimization combined with reconstruction capping can dramatically reduce storage requirements, making EigenDA more efficient while preserving security guarantees.

Further Reading

Chunk Assignment Documentation: https://layr-labs.github.io/eigenda/protocol/architecture/assignment.html